Learn how automatic code review improves software quality and accelerates development. Explore tools, best practices, and integration into your CI/CD pipeline.

So, what exactly is automatic code review? At its core, it’s the practice of using specialized software to automatically scan your source code for common problems. Think of it as a vigilant, automated assistant that tirelessly checks for bugs, security vulnerabilities, and style slip-ups, freeing up your developers to focus on what they do best: building great features.

For decades, the gold standard for quality control was manual code review. A developer would finish their work, submit a pull request, and then wait for one or more teammates to painstakingly read through every single line. This human-centric approach is fantastic for catching complex logic flaws and weighing in on high-level architectural choices.

It’s a bit like having a master editor proofread a novel. They spot the subtle plot holes and suggest character development improvements—things a simple spell-checker would completely miss. In the same way, a senior developer’s review provides critical insights that only come with years of experience.

But there’s a catch. This manual process can grind productivity to a halt, creating a major bottleneck. In today’s fast-paced development world, waiting for a human review can stall progress, delay releases, and bog down your most senior engineers with routine, repetitive checks.

This is where automatic code review truly shines. It acts as a high-speed, systematic first line of defense that runs right alongside the developer. Instead of waiting for a human to flag a syntax error, a potential security risk, or a deviation from the team’s coding style, an automated tool catches it instantly.

This immediate feedback loop empowers developers to fix minor issues themselves, long before the code ever lands in front of a human reviewer. It’s important to understand that this system doesn’t replace the “master editor”; it supercharges them.

The market is clearly betting on this shift. The demand for higher software quality and tighter security has fueled massive investment in this space, and the code reviewing tool market is projected to reach $5.3 billion by 2032. This explosive growth shows just how essential these tools have become. You can dive deeper into the numbers in the full code reviewing tool market report.

To really grasp the practical differences, it helps to put the two approaches side-by-side. Each serves a distinct purpose and delivers different results. One prioritizes deep, contextual understanding, while the other is all about speed, consistency, and scale.

Here’s a quick rundown of how they stack up.

This table breaks down the core distinctions between the two methods, showing where each one excels.

Ultimately, the best development teams don’t choose one over the other; they masterfully combine both. Automatic code review lays down a solid foundation, ensuring every piece of code is clean, consistent, and secure from the start. This allows manual reviews to be faster, more focused, and centered on the creative problem-solving that drives real innovation.

To really get what an automatic code review system does, it helps to peek under the hood. This isn’t one single, giant process. It’s more like a team of specialized components working together, each with a specific job to ensure your code is solid and secure. Think of it as an assembly line where your code moves through several expert inspection stations before it gets the green light.

The whole journey kicks off with a foundational layer found in most automated tools: static code analysis. This is the first and most critical check.

You can think of static analysis as a hyper-vigilant grammar and spell-checker for your code. Without ever running your program, the static analyzer meticulously reads the source code itself. It’s on the hunt for patterns that signal potential problems, catching everything from simple syntax slip-ups to more complex logical flaws like uninitialized variables or potential null pointer dereferences.

This proactive approach means developers get feedback almost instantly, often right inside their code editor. This lets them squash bugs at the earliest possible moment—which is exactly when they’re the cheapest and easiest to fix.
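To make this concrete, here is a minimal sketch of a static check written in Python with the standard-library `ast` module. It parses source code into a syntax tree without executing it and flags two illustrative patterns: `== None` comparisons and bare `except:` clauses. The function name `find_issues` and the choice of rules are our own assumptions; production analyzers ship hundreds of far more careful rules.

```python
import ast


def find_issues(source: str) -> list[tuple[int, str]]:
    """Parse Python source without running it and return (line, message) pairs."""
    tree = ast.parse(source)
    issues = []
    for node in ast.walk(tree):
        # `x == None` can be fooled by a custom __eq__; PEP 8 recommends `x is None`.
        if isinstance(node, ast.Compare):
            for op, right in zip(node.ops, node.comparators):
                if (isinstance(op, (ast.Eq, ast.NotEq))
                        and isinstance(right, ast.Constant) and right.value is None):
                    issues.append((node.lineno, "comparison to None should use 'is' / 'is not'"))
        # A bare `except:` swallows every exception, including KeyboardInterrupt.
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            issues.append((node.lineno, "bare 'except:' hides unexpected errors"))
    return sorted(issues)


sample = """
def load(path):
    try:
        data = open(path).read()
    except:
        data = None
    if data == None:
        return ""
    return data
"""

for line, message in find_issues(sample):
    print(f"line {line}: {message}")
```

Because the code is only parsed, never run, the `open(path)` call in the sample is never executed; the analyzer reports the bare `except:` on line 5 and the `== None` comparison on line 7.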

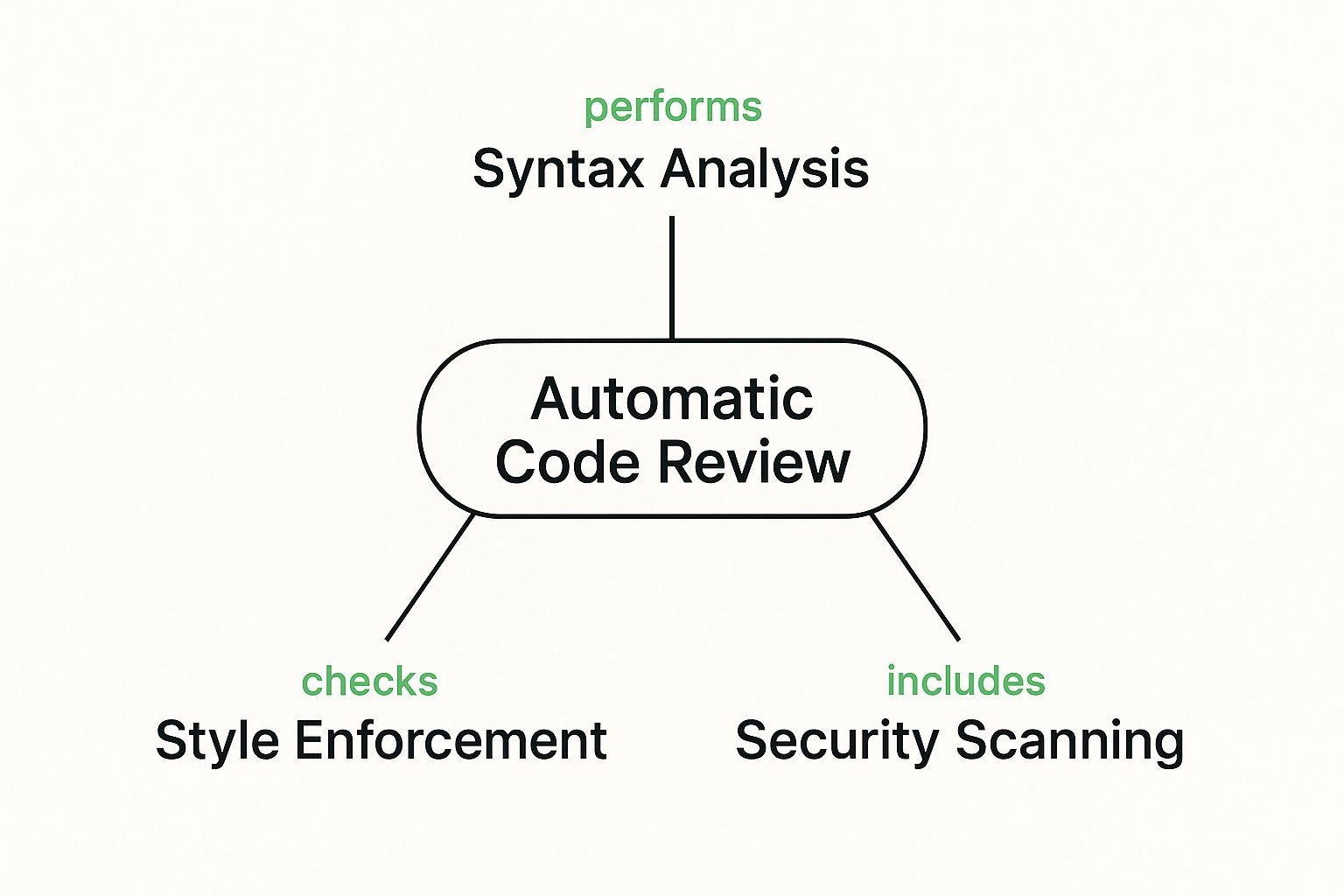

This infographic breaks down the main pillars that hold up an effective automatic code review process.

As you can see, it’s the combination of static analysis, security scanning, and style enforcement that creates a truly comprehensive quality gate.

While static analysis is fantastic for catching general bugs and “code smells,” modern development requires a much sharper focus on security. That’s where dedicated security scanning, often called static application security testing (SAST), steps in. If general static analysis is your grammar checker, think of the security scanner as a highly trained security guard for your codebase.

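This component is specifically programmed to hunt for known vulnerabilities and security anti-patterns. It scans your code for common weaknesses such as SQL injection, cross-site scripting (XSS), hardcoded passwords and API keys, and dependencies with known vulnerabilities.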

By checking your code against well-known catalogs of weaknesses, such as the risk categories in the OWASP Top 10, and against databases of publicly disclosed vulnerabilities, these scanners provide a powerful first line of defense against cyberattacks. They can drastically lower your application’s risk profile before it ever goes live.
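As a rough illustration of pattern-based security scanning, the Python sketch below flags calls to a method named `execute` whose first argument is a string built at runtime (an f-string, `%` formatting, `+` concatenation, or `.format()`), a classic SQL injection warning sign. The function names are hypothetical and the heuristic is deliberately crude: it will miss indirect cases and may flag harmless ones, which is why dedicated scanners also track how data flows through the program.

```python
import ast


def _builds_string_at_runtime(node: ast.AST) -> bool:
    """True for f-strings, `%` formatting, `+` concatenation, or `.format()` calls."""
    if isinstance(node, ast.JoinedStr):
        return True
    if isinstance(node, ast.BinOp) and isinstance(node.op, (ast.Mod, ast.Add)):
        return True
    return (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
            and node.func.attr == "format")


def find_sql_injection(source: str) -> list[int]:
    """Return line numbers where a dynamically built string is passed to `.execute()`."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
                and node.func.attr == "execute" and node.args
                and _builds_string_at_runtime(node.args[0])):
            hits.append(node.lineno)
    return sorted(hits)


code = '''
cur.execute(f"SELECT * FROM users WHERE name = '{name}'")
cur.execute("SELECT * FROM users WHERE id = %s" % user_id)
cur.execute("SELECT * FROM users WHERE id = %s", (user_id,))
'''

print(find_sql_injection(code))  # [2, 3]
```

The third call passes the value as a separate parameter so the database driver handles it safely, which is why it is not flagged.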

Have you ever tried to read a book where every chapter was written in a different font and style? It would be a confusing, exhausting mess. The exact same thing is true for a shared codebase that multiple developers are working on.

This is why automatic code review tools typically include components for enforcing code style and formatting. These tools act as the project’s official rulebook, ensuring every line of code follows a predefined set of conventions. We’re talking about everything from indentation and line spacing to how you name your variables and functions.

This consistency isn’t just about making things look pretty. It makes the code significantly easier to read, understand, and maintain for everyone on the team—both now and for years to come.
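Style rules are usually simple enough to check mechanically. This Python sketch, with hypothetical names, enforces three example conventions: a maximum line length (79 characters, the PEP 8 default), no trailing whitespace, and snake_case function names. Real formatters such as Black or Prettier go further and rewrite the code for you instead of only reporting problems.

```python
import ast
import re

MAX_LINE_LENGTH = 79  # PEP 8's default; many teams pick 88 or 100 instead
SNAKE_CASE = re.compile(r"^_{0,2}[a-z][a-z0-9_]*$")


def check_style(source: str) -> list[tuple[int, str]]:
    """Return (line, message) pairs for a few example style rules."""
    problems = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        if len(line) > MAX_LINE_LENGTH:
            problems.append((lineno, f"line too long ({len(line)} > {MAX_LINE_LENGTH})"))
        if line != line.rstrip():
            problems.append((lineno, "trailing whitespace"))
    # Naming rules need the syntax tree, not just the raw text.
    for node in ast.walk(ast.parse(source)):
        if (isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
                and not SNAKE_CASE.match(node.name)):
            problems.append((node.lineno, f"function name '{node.name}' is not snake_case"))
    return sorted(problems)


snippet = "def getUserName(user):   \n    return user.name\n"
for lineno, message in check_style(snippet):
    print(f"line {lineno}: {message}")
```

Because the rules live in code rather than in reviewers’ heads, every contributor gets the same verdict on every commit.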

At the end of the day, you can’t improve what you don’t measure. The best automatic review systems don’t just point out problems; they give you data-driven insights through comprehensive metrics and reporting. These tools often generate dashboards that let you visualize the health of your codebase over time.

This gives managers and team leads a bird’s-eye view of key quality indicators. You can track metrics such as code complexity, test coverage, code duplication, and the number of open issues by severity.

By analyzing these trends, teams can make smart, informed decisions about where to focus their refactoring efforts, celebrate real quality improvements, and build a culture of continuous improvement that’s backed by cold, hard data.
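Many quality dashboards report some variant of cyclomatic complexity, which counts the independent paths through a function. The Python sketch below approximates it as one plus the number of decision points (branches, loops, exception handlers, comprehensions, and extra `and`/`or` operands) found by walking the syntax tree. It is a simplified McCabe-style count of our own, not the exact formula any particular tool uses; for instance, a nested function’s branches are also counted toward its parent.

```python
import ast

# Node types that each add one branch to the control flow (simplified McCabe count).
_DECISION_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp,
                   ast.ExceptHandler, ast.comprehension)


def complexity_by_function(source: str) -> dict[str, int]:
    """Approximate cyclomatic complexity as 1 + decision points, per function."""
    scores = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = 1
            # Note: this also walks into nested functions, counting their branches here too.
            for child in ast.walk(node):
                if isinstance(child, _DECISION_NODES):
                    score += 1
                elif isinstance(child, ast.BoolOp):
                    # `a and b and c` adds one branch per extra operand.
                    score += len(child.values) - 1
            scores[node.name] = score
    return scores


code = '''
def classify(n):
    if n < 0 and n != -1:
        return "negative"
    for d in range(2, n):
        if n % d == 0:
            return "composite"
    return "prime-ish"

def greet(name):
    return "hi " + name
'''

print(complexity_by_function(code))  # {'classify': 5, 'greet': 1}
```

Recording numbers like these on every commit is what turns a one-off scan into a trend line you can chart on a dashboard.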

It’s one thing to understand the mechanics of automatic code review, but the real question is simple: what’s in it for you? The advantages go way beyond just spotting a few mistakes. We’re talking about tangible improvements in quality, speed, and security that you can actually measure. When you bring these tools into your workflow, you see clear value across the entire development cycle.

The most immediate win is a major leap in code quality and consistency. Think of automated scanners as a tireless gatekeeper, catching common bugs, style guide slip-ups, and potential performance hogs on every single commit.

This constant oversight sets a high bar for quality right from the start. Instead of a developer finding out about a simple but widespread issue weeks down the line, the tool flags it instantly. This lets them fix it while the code is still fresh in their mind, which is a much cheaper and faster way to handle bugs.

This might sound backward, but adding an automated step actually makes your developers more productive. You’re offloading the soul-crushing, repetitive work of checking for routine errors, freeing up your most valuable engineers to do what they do best.

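Suddenly, your senior developers aren’t stuck picking apart pull requests for inconsistent spacing or a missing semicolon. That time gets funneled back into work that truly matters, such as weighing in on architectural choices, untangling complex logic, and building great features.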

This shift in focus is a huge reason these tools are taking off: the global code review market is expected to reach around $1 billion by 2025.

Beyond making code cleaner and developers happier, automated tools are a massive security upgrade. They act as your first line of defense, systematically scanning for known vulnerabilities before they have a chance to sneak into production.

By building security scanners right into the daily workflow, you can spot and fix common threats like SQL injection or outdated dependencies early on. This proactive approach drastically cuts down your organization’s risk and supports compliance with standards and regulations such as PCI DSS and GDPR. Security stops being a stressful last-minute scramble and becomes just another part of building great software. A good checklist can really help structure this process, something we cover in our ultimate code review checklist.

When you combine all these benefits, the end result is a faster, more dependable CI/CD pipeline. Code that’s cleaner and more secure from the get-go means builds don’t fail as often and deployments run smoother. Your team can ship new features with more confidence and speed, knowing a solid baseline of quality has already been met. That’s the bedrock for scaling any modern software product.
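In a CI/CD pipeline, this baseline is usually enforced by a quality gate: a step that fails the build when the scan turns up serious problems. The Python sketch below shows the idea with a hypothetical `Finding` record and a hypothetical policy that blocks only `critical` and `high` findings. How findings are produced and which severities block a merge are assumptions you would adapt to your own tools.

```python
from dataclasses import dataclass

# Hypothetical policy: severities that block a merge. Adapt to your own tools and risk appetite.
BLOCKING_SEVERITIES = {"critical", "high"}


@dataclass
class Finding:
    """One issue reported by a scanner (a hypothetical, simplified record)."""
    rule: str
    severity: str
    file: str
    line: int


def quality_gate(findings: list[Finding]) -> int:
    """Return a process exit code: 0 lets the pipeline continue, 1 fails the build."""
    blocking = [f for f in findings if f.severity.lower() in BLOCKING_SEVERITIES]
    for f in blocking:
        print(f"BLOCKING {f.severity.upper()}: {f.rule} at {f.file}:{f.line}")
    print(f"{len(findings)} finding(s), {len(blocking)} blocking")
    return 1 if blocking else 0


findings = [
    Finding("sql-injection", "high", "app/db.py", 42),
    Finding("trailing-whitespace", "low", "app/views.py", 7),
]
exit_code = quality_gate(findings)  # prints the blocking finding and returns 1
# In a real CI step you would finish with `sys.exit(exit_code)` so a non-zero code fails the job.
```

Returning an exit code keeps the gate easy to wire into almost any CI system, since they generally treat a non-zero exit status as a failed step.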

Let’s be honest, traditional automatic code review tools are great, but they’re basically just glorified checklists. They’re incredibly good at following a strict set of rules to catch known problems. While that’s useful, they have a blind spot: they can’t understand context, spot brand-new types of bugs, or figure out what a developer was actually trying to do.

This is where AI doesn’t just improve the process—it completely changes the game.

Instead of just ticking boxes, AI-powered systems are trained on billions of lines of real-world, open-source code. By analyzing this colossal dataset, they learn the subtle patterns, structures, and unspoken conventions that separate good code from great code.

This deeper, contextual understanding allows AI to go far beyond simple syntax. It can hunt down complex bugs that span multiple files, flag tiny performance issues before they become major headaches, and even suggest elegant optimizations that a human reviewer might only spot after a long coffee break and a lot of head-scratching.

The biggest shift we’re seeing is that AI isn’t just reviewing code anymore; it’s actively helping write and improve it. Tools like GitHub Copilot are the perfect example of this new wave, acting as an AI mentor right inside your code editor.

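This tight integration completely reshapes a developer’s workflow. An AI assistant can suggest code completions as you type, flag likely bugs and performance issues while the code is still being written, and propose refactorings or optimizations before the change ever reaches a human reviewer.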

This move from passive analysis to active partnership has the market buzzing. The AI code tools sector, which includes automatic code review, is projected to reach about $18.16 billion by 2029, a sign of how much confidence the industry has in AI’s future role. If you want to dive deeper, you can check out this AI code tools market analysis.

Let’s get one thing straight: AI is not here to make human reviewers obsolete. It’s here to augment them. It acts as a powerful assistant, taking on the heavy lifting of deep, time-consuming analysis that often slows development teams down. By offloading this work, human developers are freed up to focus on what they do best.

This hybrid approach makes the entire review process smarter and faster.

When you let AI handle the nitty-gritty technical details, your human experts can elevate their thinking. They can focus on the big picture: making sure the product is not only technically solid but also aligned with business goals, built to last, and a genuine pleasure for people to use.

Picking the right automatic code review tool isn’t as simple as grabbing the one with the most features. The market is packed with fantastic options, but the “best” one for you is the one that slots perfectly into your team’s workflow, tech stack, and budget. It’s less about a long checklist and more about finding the right fit.

Think of it like choosing a vehicle. A sleek sports car is a blast on an open highway, but it’s completely useless on a muddy construction site that demands a heavy-duty truck. In the same way, the perfect tool for a solo developer working on an open-source project is going to look very different from what a massive enterprise with strict compliance requirements needs.

The real trick is to match a tool’s strengths to your team’s specific reality. You have to look at everything from the programming languages you use daily to how and where you deploy your software.

Before you even start looking at brand names, it’s smart to build a checklist of what actually matters to your team. This simple framework helps you cut through the marketing noise and compare tools based on what will truly make a difference in your development cycle.

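Here’s what to focus on: Does the tool support the programming languages and frameworks you use every day? Does it integrate smoothly with your existing workflow, including your code editor, version control, and CI/CD pipeline? Can it be deployed the way you need, whether cloud-hosted or on-premise? And does its pricing fit your budget?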

Answering these questions first will point you toward a tool that doesn’t just meet your technical needs but also fits your budget and operational style.
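One simple way to turn these questions into a side-by-side comparison is a weighted scoring matrix: rate each candidate from 1 to 5 on every criterion, weight the criteria by how much they matter to your team, and rank by the weighted average. In the Python sketch below, the criterion names, the weights, and the two candidates (`Tool A`, `Tool B`) with their ratings are all made-up placeholders, not assessments of real products.

```python
# Placeholder weights: set these to reflect what matters to *your* team.
WEIGHTS = {
    "language_support": 0.35,
    "workflow_integration": 0.25,
    "deployment_fit": 0.25,  # e.g. whether an on-premise option is required
    "cost": 0.15,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine 1-5 ratings per criterion into a single weighted average."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights) / sum(weights.values())


# Hypothetical candidates with invented ratings, purely for illustration.
candidates = {
    "Tool A": {"language_support": 5, "workflow_integration": 3, "deployment_fit": 5, "cost": 2},
    "Tool B": {"language_support": 4, "workflow_integration": 5, "deployment_fit": 2, "cost": 4},
}

ranking = sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
for name in ranking:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

Adjusting the weights is the point of the exercise: a team that prizes workflow integration above everything else could weight it heavily, which may change the outcome entirely.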

Now that we have our criteria, let’s see how some of the most popular tools on the market measure up against each other. Each one has a slightly different philosophy and excels in different areas, making them better suited for certain types of teams and projects.

The table below gives you a bird’s-eye view, comparing these tools across the key factors we just discussed. This will help you quickly narrow down the options that make the most sense for your development environment.

You can see how different needs lead to different choices. For example, a big bank with tough data privacy regulations would likely choose a self-hostable tool like SonarQube and run it on-premise. This gives them complete control over their source code and the analysis data, which is non-negotiable for meeting compliance rules.

As you explore your options, don’t forget to look for specialized solutions if your stack has unique requirements. For instance, if you’re building on WordPress, it’s worth checking out some of the top WordPress vulnerability scanner tools, as they often catch things that general-purpose analyzers might miss.