Explore 7 real-world system architecture diagram examples. Learn patterns like Microservices and EDA with deep analysis and actionable tips for your projects.

Understanding system architecture is crucial for building scalable, resilient, and maintainable software. While theoretical knowledge is important, analyzing practical examples provides the clearest path to mastering these concepts. This article moves beyond abstract definitions to provide a deep, analytical breakdown of seven essential system architecture diagram examples. Our goal is to equip you with the strategic insights and tactical knowledge needed to design robust systems for your own projects.

Instead of just showing you diagrams, we will deconstruct each one. You will learn the core principles, see how components interact, and understand the specific problems each architectural pattern solves. We will explore the trade-offs, common use cases, and best practices associated with each design. The examples covered range from foundational patterns like Layered Architecture to modern approaches such as Microservices and Serverless.

By the end of this guide, you will have a clear, replicable framework for evaluating, choosing, and implementing the right architecture for your needs. You will not only recognize these patterns but also understand the strategic thinking behind them, enabling you to create more effective and efficient system designs. Let’s dive into the examples.

The Layered (N-Tier) architecture is a foundational pattern that structures an application into horizontal layers, each with a specific role. This is one of the most classic system architecture diagram examples due to its logical separation of concerns. Communication between layers is hierarchical; a layer provides services to the one above it and consumes services from the one below it, typically following a strict “closed layer” approach where requests must pass through each layer sequentially.

This pattern promotes modularity and makes complex systems easier to manage and develop. For instance, a development team can specialize in a specific layer, like the user interface (Presentation Layer), without needing deep expertise in the database (Data Layer). This separation also simplifies maintenance, as changes in one layer, such as a database migration, have a minimal impact on others, provided the interfaces remain consistent.
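The closed-layer flow described above can be sketched in a few lines. This is a minimal illustration, not a reference implementation: the class names `PresentationLayer`, `BusinessLayer`, and `DataLayer`, the `find_customer` method, and the in-memory dictionary standing in for a database are all assumptions made for the example.

```python
from typing import Dict, Optional


class DataLayer:
    """Bottom layer: owns storage. A dict stands in for a real database here."""

    def __init__(self) -> None:
        self._rows: Dict[int, dict] = {1: {"name": "Ada", "balance_cents": 12500}}

    def find_customer(self, customer_id: int) -> Optional[dict]:
        return self._rows.get(customer_id)


class BusinessLayer:
    """Middle layer: applies business rules and talks only to the layer below."""

    def __init__(self, data: DataLayer) -> None:
        self._data = data

    def customer_summary(self, customer_id: int) -> dict:
        row = self._data.find_customer(customer_id)
        if row is None:
            raise LookupError(f"customer {customer_id} not found")
        return {"name": row["name"], "balance": row["balance_cents"] / 100}


class PresentationLayer:
    """Top layer: formats output and never touches the data layer directly."""

    def __init__(self, business: BusinessLayer) -> None:
        self._business = business

    def render_customer(self, customer_id: int) -> str:
        summary = self._business.customer_summary(customer_id)
        return f"{summary['name']}: ${summary['balance']:.2f}"


# Wire the layers top-down; each one only knows the layer directly beneath it.
app = PresentationLayer(BusinessLayer(DataLayer()))
```

Replacing `DataLayer` with another class that offers the same `find_customer` method, for example one backed by a different database, would leave the two upper layers unchanged.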

The primary strategic benefit of this architecture is its clear separation of responsibilities, which directly supports parallel development and simplifies testing. Early e-commerce platforms and many enterprise systems, like CRMs and ERPs, were built on this model because it provides stability and a structured development lifecycle.

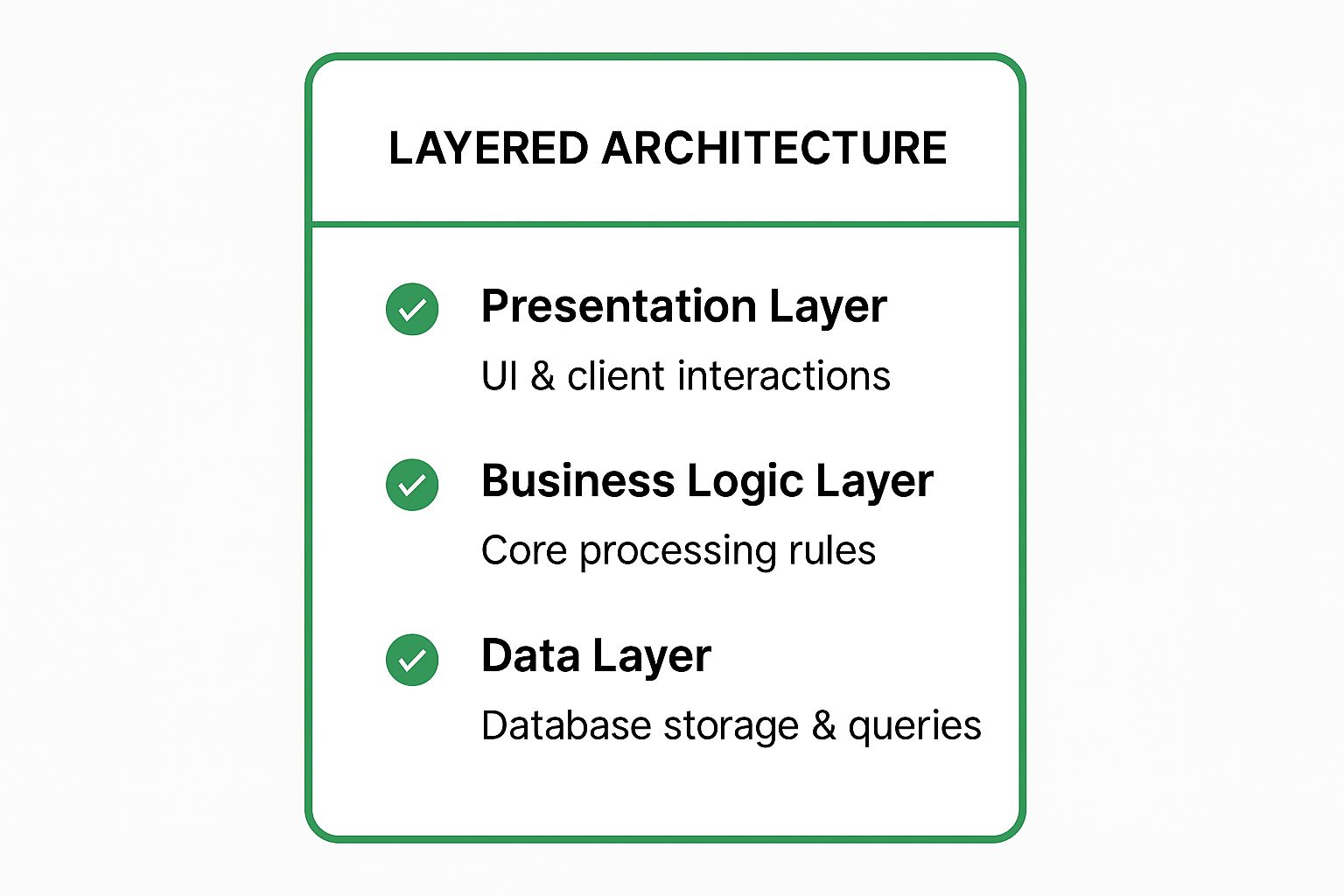

The visual below summarizes the quintessential 3-tier structure, a common implementation of this layered pattern.

This visualization highlights the distinct responsibilities, from user-facing components down to data storage, forming the core logic of the system’s structure. Understanding this flow is crucial for effective system design and documentation.

To effectively implement a layered architecture, focus on maintaining loose coupling between layers. This is critical for long-term scalability and maintenance.

By following these tactics, you can leverage the stability of the layered pattern while mitigating potential performance bottlenecks or development complexities. For more detailed guidance, see how this pattern fits into a comprehensive software architecture documentation template that outlines these components clearly.

Microservices architecture structures a single application as a suite of small, independently deployable services. Each service is built around a specific business capability and communicates with others over lightweight protocols, typically through well-defined APIs. In contrast to the monolithic model, each service can be changed, deployed, and scaled on its own, which makes this one of the most dynamic system architecture diagram examples for modern, cloud-native applications.

This pattern allows development teams to work autonomously on individual services, leading to faster development cycles and deployment frequencies. Industry giants like Netflix and Amazon championed this model to manage the immense complexity of their platforms. For example, Netflix runs on hundreds of microservices, each handling a distinct function like user authentication, content recommendation, or billing, which allows them to update parts of their system without risking a full system outage.

The core strategic advantage of microservices is organizational and technical agility. By decoupling services, teams can independently develop, deploy, and scale their components. This directly supports a DevOps culture and continuous delivery pipelines. For instance, a feature team at Spotify can update the “Discover Weekly” playlist algorithm without coordinating with the team managing user profiles, as long as the API contract between their services remains intact.
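To make the idea of an API contract concrete, here is a small sketch of two services that share nothing except a JSON payload. The names `ProfileService` and `RecommendationService`, the field names, and the in-process function call standing in for an HTTP request are all hypothetical; real services would run as separate processes behind a network API.

```python
import json
from typing import Callable, List


class ProfileService:
    """Owns profile data and exposes it only through a JSON contract."""

    def __init__(self) -> None:
        self._profiles = {
            "u1": {"display_name": "Ada", "favorite_genre": "jazz", "email": "ada@example.com"}
        }

    def handle_get_profile(self, user_id: str) -> str:
        profile = self._profiles[user_id]
        # Contract (assumed for this sketch): callers are promised only these two fields.
        return json.dumps({"user_id": user_id, "favorite_genre": profile["favorite_genre"]})


class RecommendationService:
    """Depends on the contract, not on how ProfileService stores its data."""

    def __init__(self, fetch_profile: Callable[[str], str]) -> None:
        # fetch_profile stands in for an HTTP client call to the profile service.
        self._fetch_profile = fetch_profile

    def weekly_playlist(self, user_id: str) -> List[str]:
        payload = json.loads(self._fetch_profile(user_id))
        genre = payload["favorite_genre"]
        return [f"{genre} pick {n}" for n in range(1, 4)]


profiles = ProfileService()
recommendations = RecommendationService(profiles.handle_get_profile)
```

As long as the JSON fields stay the same, the profile team can change its storage or internal fields without the recommendation team needing to redeploy.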

This decentralized approach also enhances resilience. The failure of a single non-critical service does not necessarily bring down the entire application. While it introduces operational complexity, the ability to innovate quickly and scale specific functions based on demand provides a significant competitive edge for large-scale, complex systems like those used by Uber and Twitter.
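The resilience claim can be illustrated with a simple fallback. In this sketch, a hypothetical `build_home_page` function treats recommendations as non-critical: if that dependency is unreachable, the page is still served in a degraded form. The function names and the use of `ConnectionError` to signal an outage are assumptions for illustration.

```python
from typing import Callable, Dict, List


def build_home_page(
    user_id: str, fetch_recommendations: Callable[[str], List[str]]
) -> Dict[str, object]:
    """Assemble a page; recommendations are optional, the catalog is not."""
    page: Dict[str, object] = {
        "user_id": user_id,
        "catalog": ["title-a", "title-b"],
        "recommendations": [],
        "degraded": False,
    }
    try:
        page["recommendations"] = fetch_recommendations(user_id)
    except ConnectionError:
        # The non-critical service is down: keep serving, but flag the page.
        page["degraded"] = True
    return page


def healthy_recommendations(user_id: str) -> List[str]:
    return ["title-c"]


def failing_recommendations(user_id: str) -> List[str]:
    raise ConnectionError("recommendation service unreachable")
```

Production systems usually add timeouts and circuit breakers on top of this basic pattern, which the sketch leaves out.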

Successfully implementing a microservices architecture requires a mature approach to distributed systems design and operations. The overhead can be substantial if not managed correctly.

Event-Driven Architecture (EDA) is a model where system components communicate by producing, detecting, and consuming events. An event is a significant change in state, such as a user placing an order or a sensor reading exceeding a threshold. This pattern promotes loose coupling, allowing services to operate asynchronously and independently, which makes it a strong fit for modern, scalable systems and a frequent subject of system architecture diagram examples. Instead of sending direct requests, components subscribe to event streams and react when relevant events occur, enabling real-time responsiveness and high resilience.

This asynchronous nature is ideal for systems requiring high scalability and fault tolerance. For example, in an e-commerce platform, placing an order can trigger separate, decoupled events for inventory updates, payment processing, and shipping notifications. If the notification service fails, the core order processing remains unaffected, and the event can be retried later. This decoupling facilitates building complex, distributed systems that are easier to scale and maintain.
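The order example above can be sketched with a tiny in-memory broker. This is an illustration under simplifying assumptions: the `InMemoryBroker` class, the `order.placed` event name, and the handler functions are hypothetical, and delivery here is synchronous, whereas a real broker would deliver asynchronously and persist events.

```python
from collections import defaultdict, deque


class InMemoryBroker:
    """Routes events to subscribers and parks failed deliveries for retry."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self.retry_queue = deque()

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        for handler in self._subscribers[event_type]:
            self._deliver(handler, payload)

    def _deliver(self, handler, payload):
        try:
            handler(payload)
        except Exception:
            # One failing consumer must not block the others; retry it later.
            self.retry_queue.append((handler, payload))

    def retry_failed(self):
        for _ in range(len(self.retry_queue)):
            handler, payload = self.retry_queue.popleft()
            self._deliver(handler, payload)


def run_order_demo():
    broker = InMemoryBroker()
    log = []
    notify_attempts = []

    def update_inventory(order):
        log.append(f"inventory updated for {order['id']}")

    def process_payment(order):
        log.append(f"payment processed for {order['id']}")

    def send_notification(order):
        notify_attempts.append(order["id"])
        if len(notify_attempts) == 1:  # simulate an outage on the first attempt
            raise ConnectionError("notification service unavailable")
        log.append(f"notification sent for {order['id']}")

    for handler in (update_inventory, process_payment, send_notification):
        broker.subscribe("order.placed", handler)

    broker.publish("order.placed", {"id": "o-1"})
    after_publish = list(log)
    broker.retry_failed()
    return after_publish, log
```

Calling `run_order_demo()` returns the log as it stood right after publishing, when only inventory and payment had succeeded, along with the final log after the retry.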

The core strategic advantage of EDA is its ability to support highly adaptable and responsive systems. It’s the backbone for use cases like IoT data processing, real-time financial trading platforms, and modern microservices-based applications where services need to react to changes without being tightly bound to one another. LinkedIn’s activity feeds, for example, rely on events to update millions of users in near real-time without creating direct dependencies between user profiles and every action they take.

The diagram below illustrates how producers generate events that are managed by an event broker and consumed by various services.

This visualization shows the central role of the event broker (like Apache Kafka or AWS EventBridge) in decoupling producers from consumers, which is the defining characteristic of EDA. Understanding this flow is key to designing systems that can handle unpredictable workloads and evolve gracefully over time. When modeling this flow, it is important to consider the trade-offs between unidirectional vs bidirectional integration to define how services will communicate and react to events.

To successfully implement an event-driven architecture, focus on event design and robust error handling to ensure system reliability and observability.

Domain.Entity.Action). This makes the system more understandable, easier to debug, and simplifies event discovery for new services.

Service-Oriented Architecture (SOA) is a design paradigm where applications are composed of discrete, interoperable services. These services are self-contained units of functionality that can be discovered, accessed, and reused across an organization, often communicating through standardized protocols like SOAP or REST. This approach represents a significant evolution in creating system architecture diagram examples, shifting focus from monolithic applications to a distributed network of business capabilities.

The core principle behind SOA is to build systems from loosely coupled, coarse-grained services that represent specific business functions, such as “Process Payment” or “Check Customer Credit.” This abstraction allows different applications to leverage the same underlying service, promoting reusability and reducing redundant development efforts. Large enterprises, including major banks and government agencies, adopted SOA to integrate disparate legacy systems and create more agile, responsive IT ecosystems.

The strategic value of SOA lies in its ability to align IT infrastructure directly with business processes. By encapsulating business logic into reusable services, organizations can adapt to market changes more quickly. For example, a core banking system built on SOA can expose a “Get Account Balance” service that is consumed by a mobile app, an online portal, and an internal teller application, ensuring consistency and efficiency.
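The “Get Account Balance” example can be sketched as one service operation that several consumers discover through a shared registry. Everything named here (`ServiceRegistry`, `get_account_balance`, the consumer functions, and the sample account) is hypothetical, and a plain function call stands in for a SOAP or REST request.

```python
from typing import Callable, Dict

_BALANCES_CENTS = {"acc-42": 150075}  # sample data for the sketch


class ServiceRegistry:
    """Lets consumers discover a service operation by its business name."""

    def __init__(self) -> None:
        self._operations: Dict[str, Callable[..., dict]] = {}

    def register(self, name: str, operation: Callable[..., dict]) -> None:
        self._operations[name] = operation

    def lookup(self, name: str) -> Callable[..., dict]:
        return self._operations[name]


def get_account_balance(account_id: str) -> dict:
    """The single shared implementation of the business function."""
    return {
        "account_id": account_id,
        "balance_cents": _BALANCES_CENTS[account_id],
        "currency": "USD",
    }


def mobile_app_view(registry: ServiceRegistry, account_id: str) -> str:
    result = registry.lookup("GetAccountBalance")(account_id)
    return f"Balance: {result['balance_cents'] / 100:.2f} {result['currency']}"


def teller_view(registry: ServiceRegistry, account_id: str) -> dict:
    result = registry.lookup("GetAccountBalance")(account_id)
    return {"account": result["account_id"], "cents": result["balance_cents"]}


registry = ServiceRegistry()
registry.register("GetAccountBalance", get_account_balance)
```

Both consumers present the data differently but read the same figure from the same service, which is the consistency benefit described above.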

This model was foundational for large-scale integration projects at companies like IBM and Oracle, enabling them to connect complex enterprise resource planning (ERP) and supply chain management platforms. It provides a structured way to manage complexity and foster enterprise-wide asset reuse, making it a powerful tool for digital transformation initiatives in large, established organizations.

Successfully implementing SOA requires robust governance and a long-term strategic vision. Without careful management, it can lead to a complex web of dependencies that is difficult to maintain.

Serverless architecture is a cloud-native design pattern where the cloud provider dynamically manages the allocation and scaling of servers. Applications are built as a collection of functions that are triggered by events, such as an HTTP request or a new file upload. This model abstracts away the underlying infrastructure, allowing developers to focus entirely on writing business logic without worrying about provisioning or managing servers. This makes it a powerful and agile entry in any list of system architecture diagram examples.

This approach, popularized by services like AWS Lambda and Azure Functions, promotes building highly scalable, cost-efficient systems. Because you only pay for the compute time you consume, it can be significantly cheaper for applications with intermittent or unpredictable traffic. For example, Netflix uses serverless functions for its video encoding pipeline, processing files as they arrive, and Airbnb leverages them for its data processing pipelines, scaling resources on demand.
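The pay-per-use argument comes down to simple arithmetic, sketched below. The rates used in the test values are placeholders, not real provider prices, and the model deliberately ignores per-request fees, memory sizing, and free tiers that real pricing typically includes.

```python
def pay_per_use_cost(
    invocations: int, avg_duration_s: float, price_per_compute_second: float
) -> float:
    """Monthly cost when billed only for the compute time actually consumed."""
    return invocations * avg_duration_s * price_per_compute_second


def always_on_cost(hourly_rate: float, hours_in_month: int = 730) -> float:
    """Monthly cost of a server that runs whether or not traffic arrives."""
    return hourly_rate * hours_in_month


def break_even_invocations(
    hourly_rate: float, avg_duration_s: float, price_per_compute_second: float
) -> float:
    """Invocation count at which both models cost the same per month."""
    return always_on_cost(hourly_rate) / (avg_duration_s * price_per_compute_second)
```

Below the break-even volume the pay-per-use model is cheaper in this simplified view; above it, an always-on server may win, which is why the advantage is strongest for intermittent traffic.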

The core strategic advantage of serverless is its operational efficiency and rapid development cycle. It eliminates the need for infrastructure management, reducing operational overhead and allowing teams to deploy new features faster. This is particularly beneficial for startups and enterprises looking to innovate quickly or handle event-driven workloads, like IoT data processing or real-time file manipulation.

Coca-Cola, for instance, used a serverless architecture to process data from its vending machines, handling payments and inventory management without dedicated server maintenance. The event-driven nature of serverless is perfect for such asynchronous tasks, where a trigger (a purchase) initiates a short-lived function. This reactive model is key to its efficiency and strategic value.
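A purchase-triggered function of this kind might look like the sketch below. The `(event, context)` signature follows the convention AWS Lambda uses for Python handlers, but the event shape, field names, and the `handle_purchase` name are assumptions for illustration and do not describe any company’s actual system.

```python
import json


def handle_purchase(event, context):
    """Runs once per purchase event, keeps no state between invocations."""
    purchase = json.loads(event["body"])
    charged_cents = purchase["unit_price_cents"] * purchase["quantity"]
    receipt = {
        "machine_id": purchase["machine_id"],
        "item": purchase["item"],
        "charged_cents": charged_cents,
    }
    # A real function would now call payment and inventory services;
    # this sketch only returns the computed receipt.
    return {"statusCode": 200, "body": json.dumps(receipt)}
```

Because the function holds no state of its own, the platform can run as many copies in parallel as there are incoming events.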

To effectively build and maintain a serverless architecture, focus on function design and performance monitoring to avoid common pitfalls like high latency or complex state management.

The Container-Based architecture is a modern approach that packages an application and all its dependencies into a single, lightweight, and portable unit called a container. Containers provide process isolation and manage resources independently while sharing the host operating system’s kernel. Because they do not each boot a full guest operating system, they typically start faster and use fewer resources than traditional virtual machines. This pattern is one of the most transformative system architecture diagram examples because it ensures an application runs consistently across different computing environments, from a developer’s laptop to a production cloud server.

This model, popularized by technologies like Docker and orchestration platforms like Kubernetes, addresses the classic “it works on my machine” problem. By encapsulating everything an application needs to run, containers eliminate environmental discrepancies and streamline the development, testing, and deployment pipeline. Major tech companies like Google, Spotify, and even financial institutions such as Capital One have adopted this architecture to build scalable, resilient, and cloud-native services.
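One common way to keep the code inside a container identical across environments is to read environment-specific settings from environment variables that the container runtime injects at start-up. The sketch below assumes hypothetical variable names (`APP_PORT`, `DATABASE_URL`, `LOG_LEVEL`) and default values; it illustrates the idea rather than prescribing a configuration scheme.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    port: int
    database_url: str
    log_level: str


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build settings from the environment; the same image works everywhere."""
    return Settings(
        port=int(env.get("APP_PORT", "8080")),
        # Required: fail fast with KeyError if the runtime did not provide it.
        database_url=env["DATABASE_URL"],
        log_level=env.get("LOG_LEVEL", "INFO"),
    )
```

With this approach, a laptop and a production cluster can run the same image and differ only in the variables passed to the container.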

The primary strategic benefit of container-based architecture is portability combined with efficient resource use, which shortens the CI/CD pipeline because the same image moves unchanged from build to test to production. It also pairs naturally with microservices: once an application is split into smaller services, each can run in its own container. This enables independent scaling, development, and deployment of services, fostering agility and innovation.

The visual below illustrates how containers encapsulate application code and dependencies, managed by a container engine on a host OS, providing a clear model for deployment.

This diagram shows the isolation and self-sufficiency of each container, which is the core principle enabling consistent behavior across any environment. Understanding this structure is key to designing robust, scalable, and easily manageable modern systems.

To effectively leverage a container-based architecture, focus on optimizing container images and managing their lifecycle properly. This ensures your system remains performant, secure, and maintainable.

The Hexagonal Architecture, also known as the Ports and Adapters pattern, is designed to create highly decoupled application components. This pattern isolates the core business logic from external concerns like databases, user interfaces, or third-party APIs. It achieves this by defining “ports” (interfaces) for communication and using “adapters” to connect these ports to external technologies, making it one of the most flexible system architecture diagram examples.

This model places the application’s domain logic at its center, completely independent of any external frameworks or tools. All interactions with the outside world, whether driven by a user or an external system, occur through these ports. This structure protects the core logic from changes in technology and infrastructure, enhancing maintainability and long-term viability. For example, a system can switch from a REST API to a GraphQL interface simply by swapping out an adapter, with no changes to the core business rules.

The primary strategic advantage of the Hexagonal Architecture is testability and adaptability. Because business logic is decoupled from external dependencies, developers can test the core domain in complete isolation, with no database, network, or framework involved, which makes tests faster and more reliable. This pattern is frequently used in systems designed with Domain-Driven Design (DDD), where protecting the integrity of the domain model is paramount.

Financial services core banking systems and e-commerce platforms with multiple payment gateways are prime examples. In these cases, the core logic for processing transactions remains stable while adapters are built for different payment providers (e.g., Stripe, PayPal), user interfaces (web, mobile), or notification systems (email, SMS). This approach allows the system to evolve and integrate with new technologies without costly rewrites of the fundamental business logic.
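The payment-gateway case can be sketched with one port and interchangeable adapters. The names `PaymentGateway`, `CheckoutService`, `CardProviderAdapter`, `WalletProviderAdapter`, and `RecordingGateway` are hypothetical, and the adapters return placeholder strings instead of calling any real provider SDK.

```python
from typing import List, Protocol, Tuple


class PaymentGateway(Protocol):
    """Port: the only thing the core knows about payment providers."""

    def charge(self, amount_cents: int, reference: str) -> str: ...


class CheckoutService:
    """Core business logic: no imports from any provider or framework."""

    def __init__(self, gateway: PaymentGateway) -> None:
        self._gateway = gateway

    def place_order(self, order_id: str, item_prices_cents: List[int]) -> str:
        if not item_prices_cents:
            raise ValueError("order must contain at least one item")
        total = sum(item_prices_cents)
        return self._gateway.charge(total, reference=order_id)


class CardProviderAdapter:
    """Adapter: would wrap a card provider's SDK in a real system."""

    def charge(self, amount_cents: int, reference: str) -> str:
        return f"card:{reference}:{amount_cents}"


class WalletProviderAdapter:
    """Adapter: would wrap a wallet provider's SDK in a real system."""

    def charge(self, amount_cents: int, reference: str) -> str:
        return f"wallet:{reference}:{amount_cents}"


class RecordingGateway:
    """Test double: lets the core be tested with no external dependency."""

    def __init__(self) -> None:
        self.charges: List[Tuple[str, int]] = []

    def charge(self, amount_cents: int, reference: str) -> str:
        self.charges.append((reference, amount_cents))
        return "test-receipt"
```

Switching providers means passing a different adapter to `CheckoutService`; the order-total rule and its tests stay the same.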

To successfully implement a Hexagonal Architecture, the focus must be on maintaining a strict boundary between the core application and its external connections. This discipline is key to realizing its benefits.

Navigating the landscape of system architecture is a journey from abstraction to implementation, and the diagrams we’ve explored serve as your essential maps. We’ve moved beyond simple illustrations, delving into the strategic core of seven powerful architectural patterns: from the structured discipline of Layered and SOA designs to the dynamic, decoupled agility of Microservices, Event-Driven, and Serverless models. The detailed analysis of these system architecture diagram examples reveals a crucial truth: a diagram is not merely a picture, but a strategic blueprint that dictates a system’s scalability, resilience, maintainability, and cost-efficiency.

The true value of these examples lies not in replicating them line-for-line, but in internalizing the design principles they embody. Each diagram tells a story of trade-offs, of prioritizing certain capabilities over others, and of aligning technology choices with concrete business goals.

To translate this knowledge into action, focus on these core principles: